Artificial Intelligence makes mental health support more accessible - but at what ethical cost?


In November 2022, ChatGPT was released. It took the world by storm because it made Artificial Intelligence (AI) accessible to the public while offering an insight into the vast capabilities of AI.

It attracted a range of criticisms, such as being used to cheat in exams and complete students' homework, and raised questions about whether it will overhaul the way we perceive art and film. Even so, it was created as a tool to help humans.


The last few years have seen waves of Covid-19, a struggling NHS, a borderline recession and a huge mental health crisis. So the question is: can AI aid mental health, and could it offer a more accessible, quicker way to relieve some of the load on mental health professionals?

Can AI navigate mental health?

Mental health shapes our lives. When our mood is low, we look for ways to make ourselves feel better. When we're happy, we like to share it. Arguably, our emotions and fluctuating health are what make us human, so how can an AI help with that?

Wysa is an AI chatbot designed to help people experiencing low mood, stress or anxiety to build tools and techniques for maintaining their mental well-being in a self-help context.

Megha Gupta, of Wysa, said that "Wysa’s AI is both listening and therapeutic." In the listening stage, the bot prompts the user with open-ended questions that help them reflect on and explore their thoughts and feelings.


Wysa uses cognitive behavioural therapy (CBT) techniques and more than 100 natural language understanding (NLU) AI models that work with users' free-text input. Gupta expands: "Unlike ChatGPT, the responses Wysa provides are not AI-generated. Instead, Wysa uses a clinician-approved rule engine that allows it to respond intelligently and appropriately while maintaining clinical safety."

Having an AI bot work alongside human care practitioners can help relieve some of the burden on the NHS and other health services. "Wysa is always there, even at 4 am. A human therapist simply can’t be,” Gupta says.

“Users talk freely about their problems without the fear of judgement, and can be open and honest about what’s going on.” Wysa says its research shows that people are likely to open up to AI, and that while an AI bot cannot replace human therapists for clinical cases, it can support sub-clinical users.

Gupta says: "Digital mental health technology has the potential to help address the mental health crisis by providing people with access to affordable, convenient, and effective mental health care. However, its developers need to ensure that the user’s clinical safety and privacy are never compromised."


How accessible is it?

Because an AI bot doesn't need to rest or eat, it is available 24/7. It can also take on part of the workload, freeing up professionals for tasks that need human intervention, and so help bridge gaps in healthcare provision.

Offering a digital option on a virtual platform also widens access, which is an "essential part of inclusivity and equity in access. For instance, individuals belonging to rural communities, or shift workers, may not have access to mental health facilities at times or locations that suit their needs."

Dr Tim Althoff, assistant professor of Computer Science at the University of Washington, walked me through, over Zoom, how an AI bot created with Mental Health America delivers mental health support. First, the user writes down their initial thought; for example, Dr Althoff (a researcher himself) wrote "I'll never achieve success as a researcher". Next, they are prompted to elaborate on what led to this thought, which provides context and personalisation.

The next stage is where the AI comes in: it "starts you off with multiple possible (thought) reframes. We use multiple to make sure that every single person using this tool will have at least one they can relate to." The idea is then to look at the alternative reframes the AI offers and apply them when similar thoughts occur. With this model, users can also work with the AI to create their own reframes, using its suggestions as prompts.


Dr Althoff says this tool has been used by more than 50,000 people and is open to the public. Accessibility is a key component of his work. He says: "I think most important to me is that there's a future of mental health that looks different from the present." He explains that many people are suffering without access to adequate mental health support, and he is highly supportive of any approach that gets us there. He says: "As an AI researcher, I think this is one of the most meaningful things I believe I could do."

Can an AI bot help with empathy?

Dr Althoff suggests AI in the healthcare system could soon become cost-effective, making it an easier, more accessible and cheaper option for people to use. But where does it stand on the very human emotion of empathy?

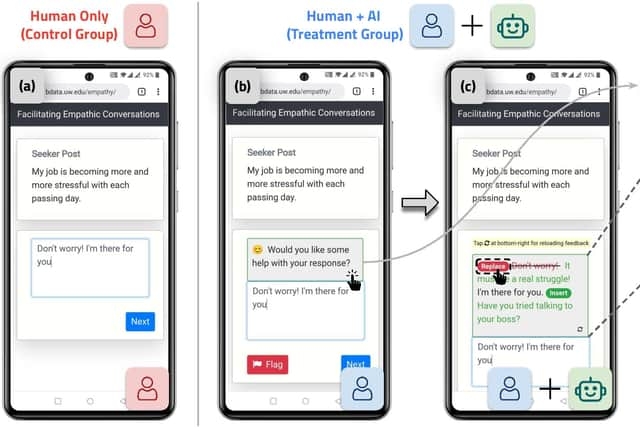

Naturally, an AI cannot display empathy, but what it can do is help someone who wants to show empathy "find the right words to express empathy effectively". Dr Althoff explains by showing me an example from a peer support platform, TalkLife. He says: "We saw that empathy was quite rare and that there were a lot of missed opportunities... a lot of people, even if they mean well, often jump to telling the person what to do."

In this scenario, Dr Althoff writes ‘Don't worry, I'm here for you’, which the AI flags. The system underlines ‘Don't worry’ and suggests replacing it with the more validating ‘It must be a real struggle’, but keeps ‘I'm here for you’. The idea is to make minimal changes, altering only the parts of the sentence where more empathy can be injected.


In their study testing the AI, the researchers found that participants preferred collaborating with the AI, even after they had been given traditional empathy training. More exciting for the researchers, 69% of the TalkLife peer supporters who took part felt more confident writing supportive responses after the study.

Ethics and safety of AI

One aspect of combining AI with mental health that Dr Althoff stressed to NationalWorld was safety and ethics. He said: "It's absolutely critical that these systems are safe to use. It is really important for us to understand the potential risks to be able to identify them, describe them, mitigate and prevent them."

To help mitigate these risks, researchers often collaborate with clinical psychologists. They also reduce risk by having the AI intervene with the peer supporter rather than the person in crisis, so the peer supporter or mental health professional can make adjustments. Right now, AI also works within a narrow scope, and human connection has to come first. Supporters can even flag any AI suggestions that are inappropriate or potentially harmful.

AI for mental health is a growing market, with the hope that it can be a cost-effective, non-discriminatory and accessible aid for the mental health crisis around the world - and so far, it seems to be working.
